Bullshit context and the Meta AI

Using Meta's LLM for background information is really that bad

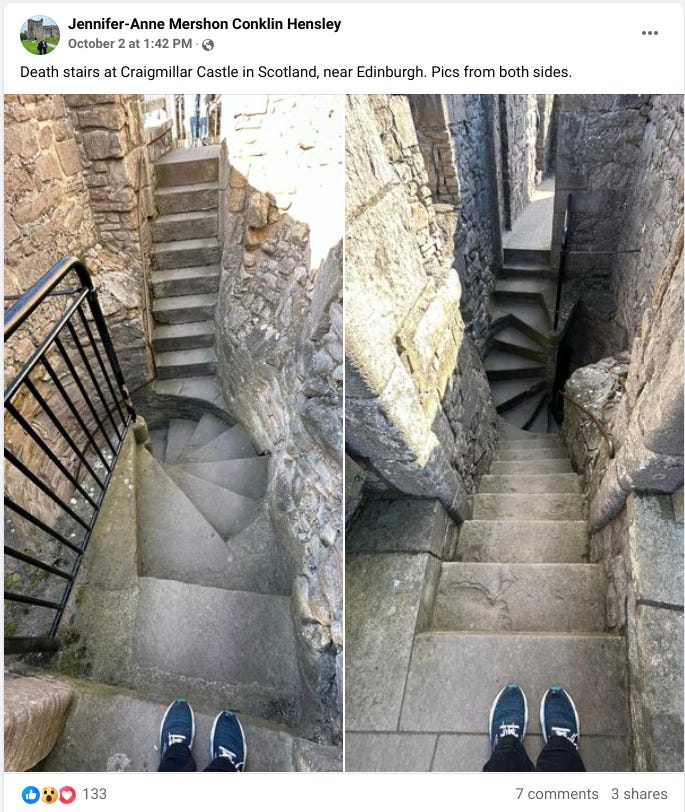

This morning a picture came up on Facebook of some stairs at a castle.

That’s interesting, I thought. I wonder why they are called death stairs? This is the sort of “overlay” question I’ve been talking about relative to AI and LLMs. So I thought, why not see what Meta’s AI chat gives me here?

Boy, was that a mistake.

Let’s get to the end of the story first. After the interaction I am about to show you I decided to just SIFT the photo and found out that this Facebook post is the only post on the entire internet that calls these “death stairs”. That took 5 seconds. I then put in “death stairs” and found on the first page of results an article talking about the Facebook group “death stairs” — a group where people take pictures of dangerous looking stairs and share them with the group. I realized that was what had happened here. A member of the Death Stairs Facebook group saw a set of stairs at a castle and said hey, check out these death stairs.

So that’s the real story — delivered by the SIFT method in approximately 1 minute.

So what did Facebook tell me? Get ready for a ride.

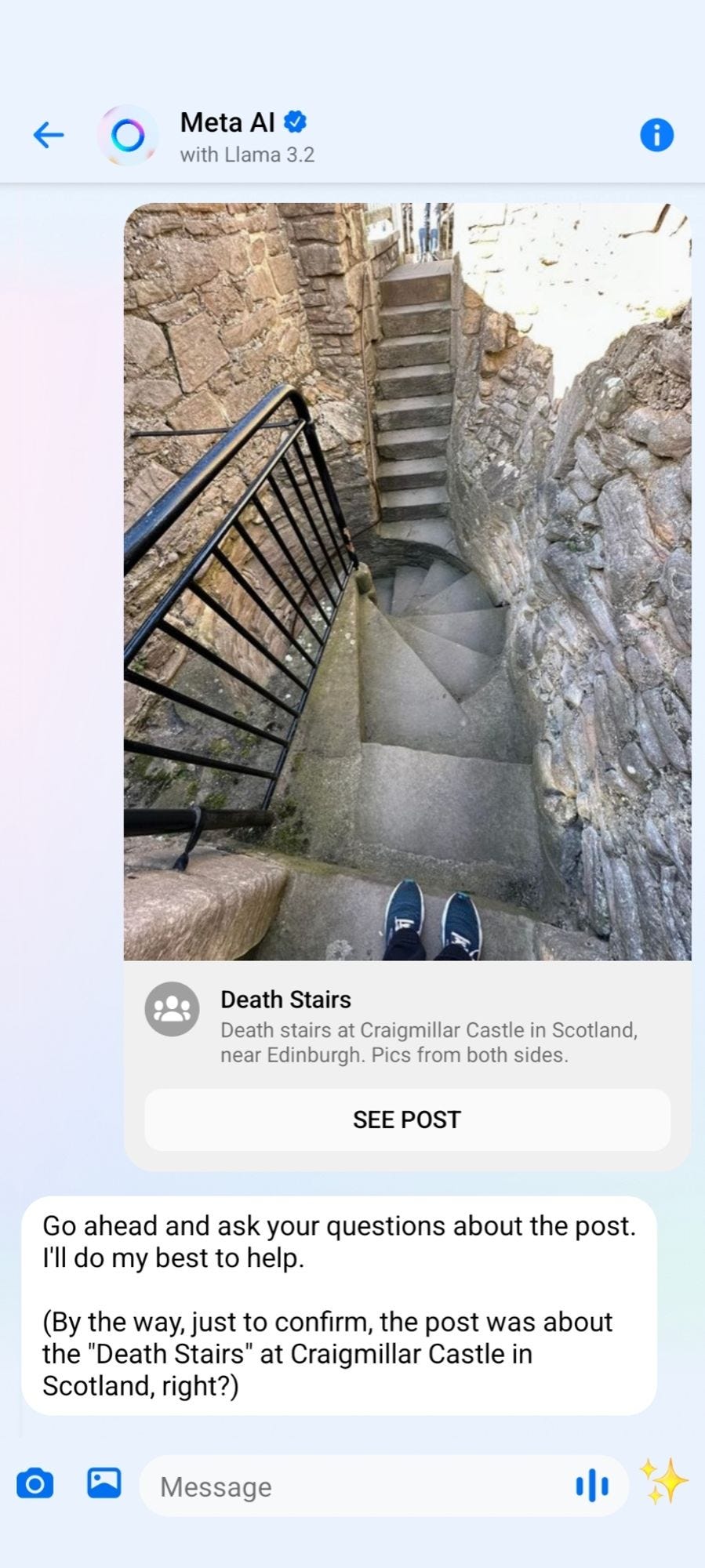

First it let me know I could ask questions about the post. Great.

Then it let me know these stairs were “infamous”. Fascinating! Would I like to know their legends and history?

Sure, I said, why are they called that?

Well, it says, they got their name because of this set of features. The features aren’t off here, but actually seem lifted from a description of servant staircases in Victorian England. And keep in mind, this is an explanation of why these “infamous” stairs that aren’t infamous are named something that they are not named.

It keeps going of course. By the third paragraph there’s a series of deaths potentially associated with the stairs. There’s also a whole theory on how the dangerous nature of the steps might have been intentional.

The stairs might have been built in a dangerous way to protect against sieges! Or they might have been designed as a test of the “worthiness” of visitors to the castle!

It suggests to me the question “How many people have died?”

Keep in mind that the stairs are actually not a thing and associated with no known deaths.

Well, Meta can’t find a comprehensive guide to all deaths on those stairs, but it offers some possibilities. The Earl of Mar (who, research reveals, was imprisoned at the castle and likely assassinated) might be related to these stairs. It also suggests that a double homicide in an apartment in Edinburgh, where a father and son were killed after being beaten with hammers and stabbed with a knife, might be related to the stairs. Some suicides in the neighborhood might also be of interest, and it offers to fetch crime statistics.

I just want to reiterate: this was not a gotcha question, and this is not cherry-picking a bad result. This is my one interaction with the AI in over a month. The questions I asked were the questions it suggested I ask. And it is 100% bullshit.

Again, I know why companies are doing this: the race for the overlay is real. But I also wonder how bad it would have to get for them to pull it, if performance this bad hasn’t gotten it pulled already.

Exactly. And this kind of thing happens to me quite often, actually. I'm trying to figure out why other people don't mind or don't care about this frequency of hallucination!

Your experience is one example of why I have deep skepticism whenever I see the 100th claim of the month that "AI (presumably LLMs) is going to change everything." I assume the idea is change for the better. I'm just another old Unix hacker, which probably makes me unusually obdurate, but I'm having serious difficulty buying into the hype of a miraculous application that has to be continually fact-checked.