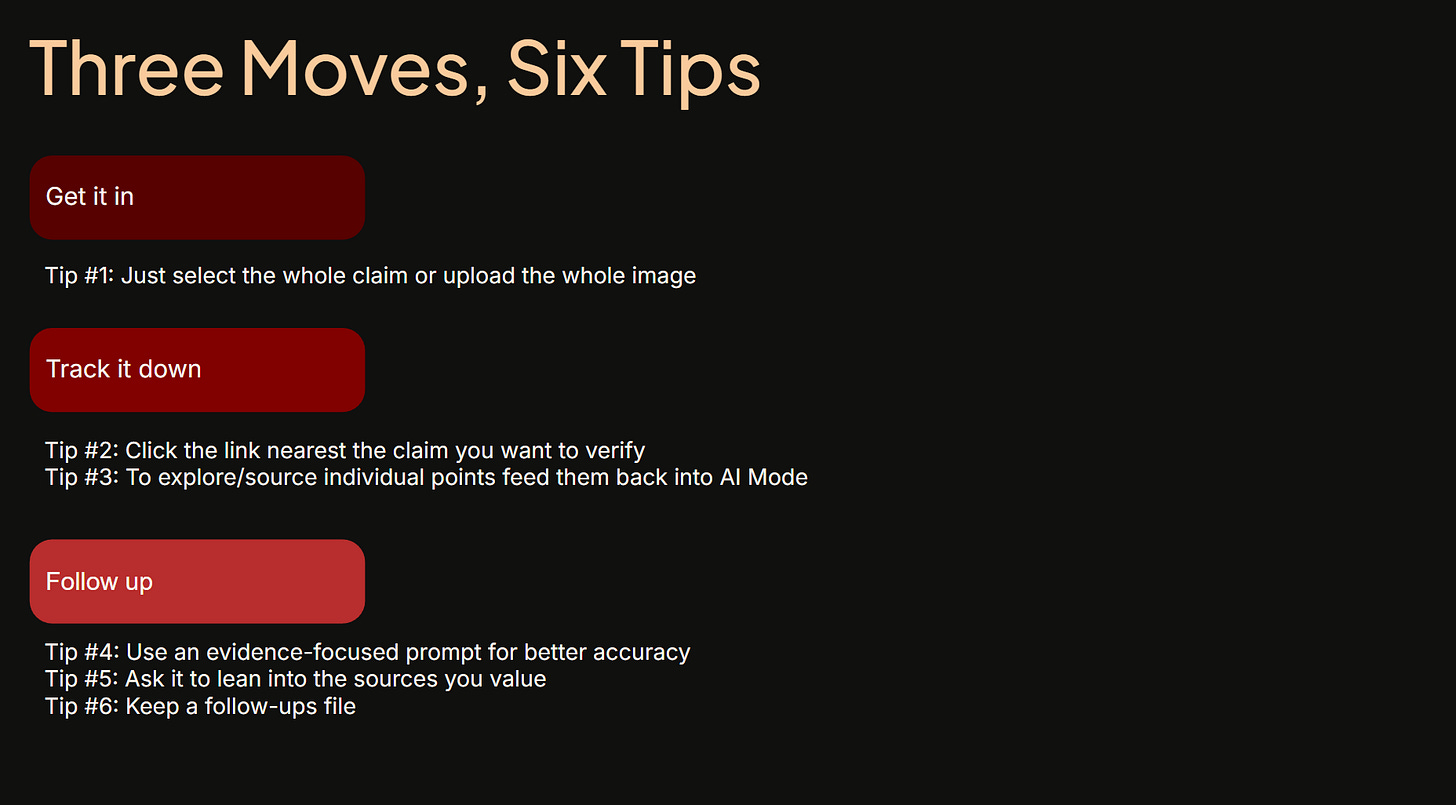

Get it in, track it down, follow up

Three essential moves for using AI for verification and contextualization, with a bit of LLM-specific guidance

I’ve been putting off writing a blog post on some exploration I’ve been doing, developing AI moves for students. That “SIFT for AI” thing people keep asking me for. The delay comes from one of my most common reasons for putting things off: I’ve been working on this so long that I have 100 things to say about it, and now I have to whittle them down to 10 to 15 paragraphs.

I haven’t been able to do that, obviously.

In the interim, I figured I would at least show you how I’ve been approaching this issue lately. So here’s a video, pretty off-the-cuff.

The idea here is not to make everyone an excellent super-prompter. I think that would be an interesting course to teach, but there are approximately 1 million people on LinkedIn trying to sell people that exact service.

The question I’m trying to address is this: if we had a couple of 45-minute sessions with students, what could we show them that would make them significantly better at using AI to verify and contextualize things they see online? What could we show them that not only gets learned but gets done when they leave the classroom? That operates as habit, not understanding?

What I’ve landed on is three things.

Get it in. One thing we found with SIFT was that the biggest barrier was not search skills but building the habit of checking at all. If you could get them to the search page, that was 90% of the battle. The key to getting most people to build a habit of checking things with AI is, somewhat paradoxically, to have them see the first query as just a first round, something that doesn’t have to be perfectly crafted or engineered. In the examples I’ve tried, quite often the best move is just to select what you’re looking at and push it up to the AI.

Track it down. Part of my challenge was to get this all down to three moves, and to think quite carefully about which three things you really need to hammer on with students. It became evident that “tracking it down” (following the AI’s citations to their sources and verifying that those sources are correctly described) had to be one of the three. I don’t think people need to track down everything, and I don’t think they have to treat AI search like a Zotero-powered research session. But there are simple things people can do to find grounding, and we should let students know that for serious work, or for questions with real stakes, this is part of the work.

Follow up. Again, there are a million things we could choose to tell students here. But one of the biggest errors I see is that people either take the first result they get or start arguing back and forth with the LLM in unproductive ways. I think teaching students how to use an “evidence-focused follow-up” that can shape the response, and often boost its accuracy or nuance, has to be core to any short lesson. The first pass with an LLM is often like the first search result you get back. Maybe it’s perfect. But maybe it’s not, and the best thing you can do is follow up to sharpen, shape, refine, or otherwise produce a response that is more accurate or better meets your needs.

Anyway, it’s all in the video. I know that’s an imperfect format that lacks concision, but if you want to see how this maps onto practice, that’s where you’re going to see it for the time being.

Thanks Mike! This is really helpful and easy enough to explain to students. I appreciate your comment that just the “get it in” stage is 90% of the battle. That initial feeling of “could this really be true?” skepticism. That’s everything. (I had a friend tell me with confidence that mosquitoes only bite smart people; she had read it somewhere and there was no skeptical questioning at all.) I tried to convince her to just do some follow-up checking on it, but she seemed uninterested at that point. I guess she also didn’t want to argue with an invertebrate zoologist.

Mr. Caulfield, this is rad! I love that it’s behaviorally focused. I am totally interested in the theory behind this stuff... but just get kids practicing AI/digital literacy and get them interested in the theory after.

I am relatively new to your Substack. Is the site in the video that you used to pick a claim to fact-check available to all, or something you use privately? I would love to use it, or something like it, with my students. Thanks!