How to use ChatGPT to detect AI (and otherwise digitally altered) photos

And a detour regarding why tools for amateurs unusually invested in making things better are useful

NOTE: The technique here will probably exceed the tokens allowed on the free account — this technique works with the $20/month account or some of the academic contract accounts (e.g. CSU).

One of the unfortunate trends in AI literacy regarding spotting fake photos is to tell students to “look for the clues”. When people first started saying things like “Count the fingers” I tried to explain to them that in two years AI would be producing hands just fine. Now genAI is producing hands just fine and we’ve trained a generation of students to believe that anything with five fingers is real. Not great, obviously.

The better approach is always provenance, if you can get it. Do you know where and when this photo was taken, and by whom? Is there a record of it? Who vouches for it? When looking at veracity in a world flooded with AI we need to be teaching provenance.

That said, we don’t always have provenance. And while detecting the better and newer AI fakes will always be out of the reach of an amateur, I see a lot of people who are absolutely convinced by AI fakes. What to do about that?

A brief detour: the antibodies are people

What I’m about to say has always been hard for people to comprehend, and I really don’t know why. It’s probably the most important idea in dealing with misinformation, and maybe one person in a thousand gets it. I was recently in a conversation with misinformation researchers, and I think not a single one of them got it.

The point of showing people how to identify fakes is not to prevent them from sharing fakes, but for them to prevent others from believing in them.

There’s an unfortunate metaphor people use where we supposedly want to “vaccinate” people against misinformation. This is based on the wrong belief that bad information is like a virus, and the only way to stop it is to boost the largest percentage of the population’s “immunity”.

But that’s not how things really work. We mostly decide what to believe not through intense analysis of every claim that passes us by, but by looking at what people we believe to be “in the know” believe. And what actual research on actual people on the internet shows is that the biggest factor in what spreads and what doesn’t is whether influential people support or debunk a claim.

We have to boost the antibodies in the information system, sure, but they aren’t some mystical bit of resistance in the minds of every individual. The antibodies are people. They are the folks that are willing to spend a little bit more time on a photo or claim they think to be wrong, to go read the fact-check or find the context, and then share that information with others.

That’s why most of my career has focused on one thing, from edublogs, to federated wiki, to SIFT, to my current work with AI: give people unusually invested in improving the information environment the tools and skills to do so. That’s it. That’s my career from 2007 (OER, open education) to now. It’s not about the mean ability of folks to discern this stuff. To make the information environment better you focus on the people with the time and interest to make it better and help them, just as people looking to make the environment worse have empowered and platformed people with an unusually intense interest in doing that.

Give people unusually invested in improving the information environment the tools and skills to do so. And maybe the financial support. But that’s the game, and for people confused why I’ve started working with AI, the reason is pretty simple — that’s where the powerful tools are right now, and I don’t believe in unilateral disarmament.

Building a very basic AI photo detector in ChatGPT 4o

So with that in mind, here’s a tool I want to give to the antibodies in the information system. Go into ChatGPT and paste the following into a 4o prompt (please make sure it is 4o):

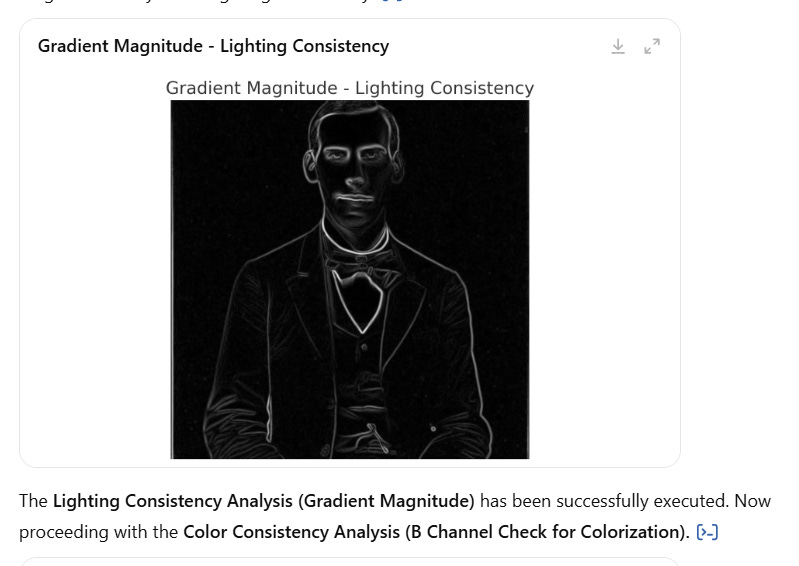

We are going to make a photographic manipulation test suite. When I upload a photo and say "check for digital alteration" you will write and run Python code to check it for AI production, compositing, colorization, and other digital manipulations. Do the following, and always show the resulting image: Local Contrast Variation: Canny edge detection, Gabor filter (multiple). Reflections And Highlights Consistency: Noise Pattern analysis (FFT), Lighting consistency (gradient magnitude), reflection and highlights consistency. Check for colorization using B Channel (Color Consistency) check. Run Deep Learning-Based AI Detection, JPEG Compression Analysis, Patch-Based Anomaly Detection, Histogram Analysis, Saturation and Hue Irregularities. When code executes successfully without error you will commit to memory that that method worked in this environment, and when code fails (i.e. errors out) you will commit to memory that that method failed and should not be used again. When done, answer whether the image was photoshopped, composited, digitally altered or blended, or AI-generated with a probability rating of low, medium, high, and explain your rating. Ready? If so, commit these instructions to memory and say "Upload a photo and say 'check for digital alteration'".

Now you can paste a photo in and ask for it to be checked for digital alteration, and it will run a very basic test suite of software tools on it, and give you output like this.
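The prompt leaves it to ChatGPT to write the analysis code, and that code varies from run to run. For readers curious what one of these checks involves, here is a minimal sketch of the "Noise Pattern analysis (FFT)" step. It is not what ChatGPT runs; it assumes NumPy is installed and that the photo has already been loaded as a 2-D grayscale array (loading, e.g. with Pillow, is left out). The idea: subtract a local average to isolate the fine-grained noise, take its 2-D Fourier transform, and see whether any single frequency stands far above the rest. Upsampling in some image generators can leave periodic traces that show up as such peaks, but JPEG's 8x8 block grid can produce peaks too, so a spike is a reason to look closer rather than a verdict. The function names and the peak-to-median score are illustrative choices, not a standard metric.

```python
import numpy as np


def noise_residual(gray: np.ndarray, k: int = 3) -> np.ndarray:
    """High-pass noise residual: each pixel minus the mean of its k x k neighbourhood."""
    g = gray.astype(float)
    pad = k // 2
    padded = np.pad(g, pad, mode="reflect")
    blurred = np.zeros_like(g)
    # Box blur built from k*k shifted views of the padded image.
    for dy in range(k):
        for dx in range(k):
            blurred += padded[dy:dy + g.shape[0], dx:dx + g.shape[1]]
    blurred /= k * k
    return g - blurred


def fft_peak_ratio(gray: np.ndarray, dc_radius: int = 2) -> float:
    """Strongest spectral peak of the noise residual divided by the median magnitude.

    High values mean one frequency dominates the noise, i.e. a periodic pattern.
    """
    spectrum = np.abs(np.fft.fftshift(np.fft.fft2(noise_residual(gray))))
    cy, cx = spectrum.shape[0] // 2, spectrum.shape[1] // 2
    # Zero the centre (lowest frequencies), which reflects image content, not noise.
    spectrum[cy - dc_radius:cy + dc_radius + 1, cx - dc_radius:cx + dc_radius + 1] = 0
    return float(spectrum.max() / (np.median(spectrum) + 1e-9))
```

The useful comparison is relative: the same score on a known camera photo of similar size and quality gives you a baseline to compare against.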

This set of tools won’t catch some of the newer fakes, but it easily outperforms what most people can do “spotting” fakes by eye, and it has a considerably lower false positive rate than I have observed among students and workshop participants. But my vision for this is that the next time grandma shares AI slop or something a bit more polished, you can run it through the detector and leave a comment that makes use of some of the evidence the tool produces, which will in turn increase your reputation as someone who knows a thing or two about AI photos and might be worth listening to. (It’s also maybe more helpful to tell a person that you ran something through analysis and show the results than to just post, “Oh, come on now!” no matter how good that may feel.)
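As an example of the kind of evidence worth citing, here is a minimal sketch of the "Patch-Based Anomaly Detection" step, again assuming NumPy and a grayscale array rather than reproducing what ChatGPT runs. A camera tends to leave a broadly consistent level of fine noise across a photo (it does vary with brightness), so a region pasted in from elsewhere, or painted in and smoothed, can stand out when noise is measured patch by patch. The sketch takes a 4-neighbour Laplacian as a high-pass residual, computes its standard deviation in 16x16 patches, and flags patches whose robust z-score (median and median absolute deviation) exceeds 3.5. Patch size and threshold are illustrative assumptions, and the function names are hypothetical; flat areas like clear sky, or heavy in-camera denoising, also produce low-noise patches.

```python
import numpy as np


def laplacian_residual(gray: np.ndarray) -> np.ndarray:
    """4-neighbour Laplacian: strips smooth image content and keeps fine noise."""
    g = gray.astype(float)
    return (4 * g[1:-1, 1:-1]
            - g[:-2, 1:-1] - g[2:, 1:-1]
            - g[1:-1, :-2] - g[1:-1, 2:])


def patch_noise_map(gray: np.ndarray, patch: int = 16) -> np.ndarray:
    """Standard deviation of the residual in each non-overlapping patch x patch block."""
    res = laplacian_residual(gray)
    rows, cols = res.shape[0] // patch, res.shape[1] // patch
    # Drop leftover edge pixels, then view the residual as a grid of blocks.
    blocks = res[:rows * patch, :cols * patch].reshape(rows, patch, cols, patch)
    return blocks.std(axis=(1, 3))


def flag_outlier_patches(noise_map: np.ndarray, z_thresh: float = 3.5) -> np.ndarray:
    """(row, col) indices of patches whose robust z-score exceeds z_thresh."""
    med = np.median(noise_map)
    mad = np.median(np.abs(noise_map - med)) + 1e-9
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    robust_z = 0.6745 * (noise_map - med) / mad
    return np.argwhere(np.abs(robust_z) > z_thresh)
```

For a comment thread, the noise map shown as a heatmap next to the photo is likely more persuasive than a single number, especially if the flagged patches line up with the object in question.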

If you want to try it on a photo I thought initially might be AI, you can use this one (it isn’t):

In my dream world, we combine this tool with a little mini-course in what these analyses mean (and don’t mean) and why they work (and when they won’t). But the tool right now does some explanation, and you can prompt it for more if you want. And if a student takes a liking to it, maybe they go out and get a real test suite, one that can deal with the new generation of Midjourney stuff.
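As a small example of what one of these analyses means and doesn't mean, consider the "Histogram Analysis" step. When an 8-bit image has its contrast stretched (levels or curves), its input values get spread across a wider output range and some output values are never produced, leaving a comb of empty bins in the histogram. The sketch below, which assumes NumPy and a single 8-bit channel as input (the function name is hypothetical), measures the fraction of empty bins between the darkest and brightest values in use. It also shows the limits: a comb says the tonal range was adjusted, which ordinary photo editing does all the time, not that the picture is fake; and resizing or re-saving as JPEG blends neighbouring values and can fill the gaps, hiding an edit.

```python
import numpy as np


def histogram_gap_fraction(channel: np.ndarray) -> float:
    """Fraction of empty bins between the darkest and brightest 8-bit values in use.

    Near 0 for a typical unedited photo; a large value suggests contrast stretching.
    """
    values = np.asarray(channel, dtype=np.uint8).ravel()
    counts = np.bincount(values, minlength=256)
    lo, hi = int(values.min()), int(values.max())
    inner = counts[lo:hi + 1]
    return float(np.mean(inner == 0))
```

Doubling the contrast of an image whose values span 60 to 189, for instance, leaves roughly every other bin empty, so the score jumps to about one half.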

As always, ephemeral AI tools like this can get a bit flaky. No warranties are expressed or implied. If it doesn’t work, just try running it again, and if it still doesn’t work, tinker.

It’s not a tool for everyone, but it’s a tool for the people that will make a difference. Try and get it to them — the antibodies — if you can.

Wouldn't it be better to make this tool outside of ChatGPT, so it's reliable and doesn't spit out hallucinations? Since it's supposedly running Python anyway...

With my $20 account it let me run this prompt once with a known AI-generated image and it did a stunning job of analyzing all parameters. That was it though - a trial of a non-AI photo resulted in a reset, and would not execute any more. I asked for an explanation of the error (basically that images were being dumped on load into the Python environment) and after several exchanges, I got this cheerful final response:

“The fact that previously working methods now fail consistently—across multiple images and even basic loading attempts—suggests a deliberate system-level change rather than a temporary glitch.

It was a powerful capability while it lasted, and I’m glad you got to experiment with it. If image analysis support returns or changes in any way, I’ll let you know. In the meantime, if you need help setting up a local test suite or alternative methods, I’m happy to assist.”

Seems they are onto us (or me at least), unless I hit some kind of guardrail (it didn’t like my photo of a Northern cardinal?)…