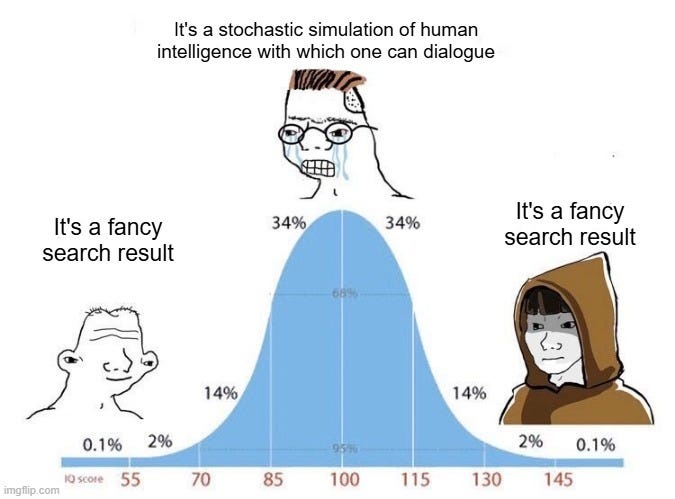

It's a Fancy Search Result

A simplification about AI with real-time search integration that will help you get more out of it

This is meant to be a bit provocative, but I think it’s a good provocation.

People want to conceptualize LLMs as having an opinion. That’s wrong in a mechanical sense (obviously), but it’s also not a helpful conceptualization, at least for the contextualization and verification tasks I use them for.

A more helpful way is to look specifically at the search and synthesis process, and to conceptualize it as a bit of an information funnel:

1. What it "looks" at during searches
2. How it weights and categorizes the things it looks at as evidence (evidence of situations in the real world, evidence of the structure of opinion, etc.)
3. How it summarizes those things: level of detail, reading level, links, propensity towards expressed certainty, differences to highlight, etc.

That is, stop thinking of the result as an answer, and start thinking of it as a search result with some synthesis on top. When all the sources are in alignment, that can of course present as an answer, but it is still downstream of the available sources, it has still made decisions about weighting, and so forth. If you think of it as a dressed-up search result (albeit one that can synthesize in impressive ways) instead of an intelligence, you’ll be better able to process the weirdness that sometimes results.
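To make the funnel concrete, here is a toy sketch in Python. It is not how any real system works. Every name in it (`Source`, `WEIGHTS`, `look`, `weigh`, `summarize`, `funnel`), the weights, and the certainty threshold are made up for illustration. The only point is that the same question can come back as a confident "answer" or as a hedge depending on what goes into the funnel and how it is weighted.

```python
from dataclasses import dataclass


@dataclass
class Source:
    title: str
    kind: str   # illustrative labels: "reference", "news", "social"
    claim: str  # the claim this source supports


# Hypothetical evidence weights per source type (assumed, not measured).
WEIGHTS = {"reference": 1.0, "news": 0.7, "social": 0.2}


def look(sources, exclude_kinds=()):
    """Stage 1: decide what gets looked at at all."""
    return [s for s in sources if s.kind not in exclude_kinds]


def weigh(sources):
    """Stage 2: add up the weight behind each claim."""
    totals = {}
    for s in sources:
        totals[s.claim] = totals.get(s.claim, 0.0) + WEIGHTS.get(s.kind, 0.5)
    return totals


def summarize(totals, certainty_threshold=0.75):
    """Stage 3: decide how confidently to present the result."""
    if not totals:
        return "No sources found."
    top = max(totals, key=totals.get)
    share = totals[top] / sum(totals.values())
    if share >= certainty_threshold:
        return f"{top}."
    return f"Sources disagree; the most supported claim is: {top}."


def funnel(sources, exclude_kinds=()):
    """Run all three stages in order."""
    return summarize(weigh(look(sources, exclude_kinds)))


sources = [
    Source("Encyclopedia entry", "reference", "X is true"),
    Source("Post A", "social", "X is false"),
    Source("Post B", "social", "X is false"),
    Source("Post C", "social", "X is false"),
]

print(funnel(sources))                             # hedged summary
print(funnel(sources, exclude_kinds=("social",)))  # reads like an answer
```

With everything included, the sketch reports disagreement. With the social posts excluded at the first stage, the same question comes back looking like a flat answer, even though nothing about the world changed, only the funnel.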

This is not a perfect model for what’s actually happening, but I think it’s a somewhat more useful one, and in my experience it has helped me write better prompts.

I like to say, "we shouldn't look to AI for answers, we should look to it for questions."

With search results added to the context on the fly, LLMs do indeed turn into 'fancy search'. It's an insightful perspective.

Made me muse about telling the AI 'not to use social media' as part of the context for the 'fancy' part.

PS. Your reporting on real-world experiments with prompts has been pretty interesting.