Working on an annotating fact-checker

Right now I'm focused on film, but it has a general application. Also: some notes on AIO.

The reason I’ve been silent for a bit is that I’ve been working on a variation of my Deep Background prompt. The forked version now checks not just a single claim but two to three paragraphs of body text for errors, and annotates that text.

I’ve then been using that to check AI Overview output to get a sense of the structure of error on that platform.

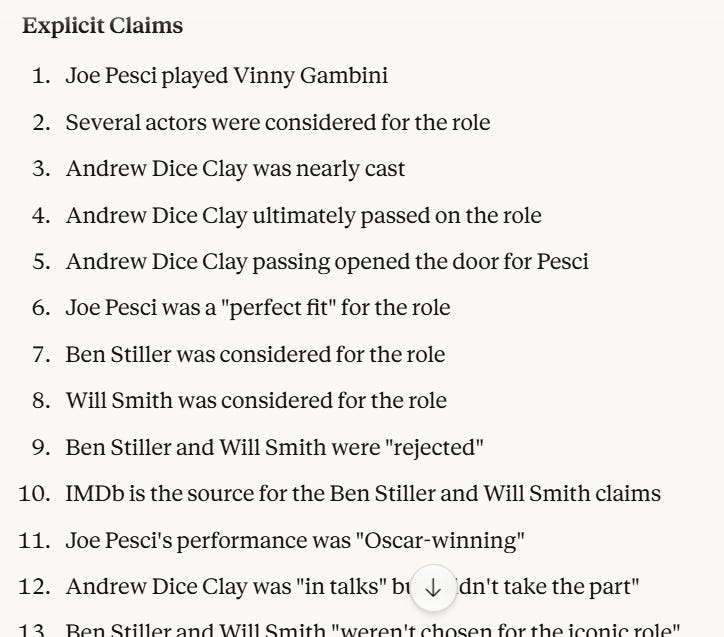

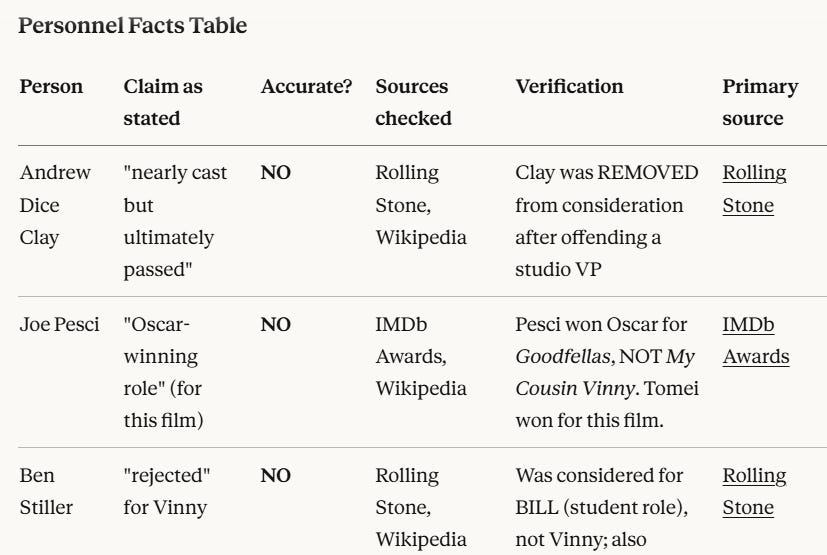

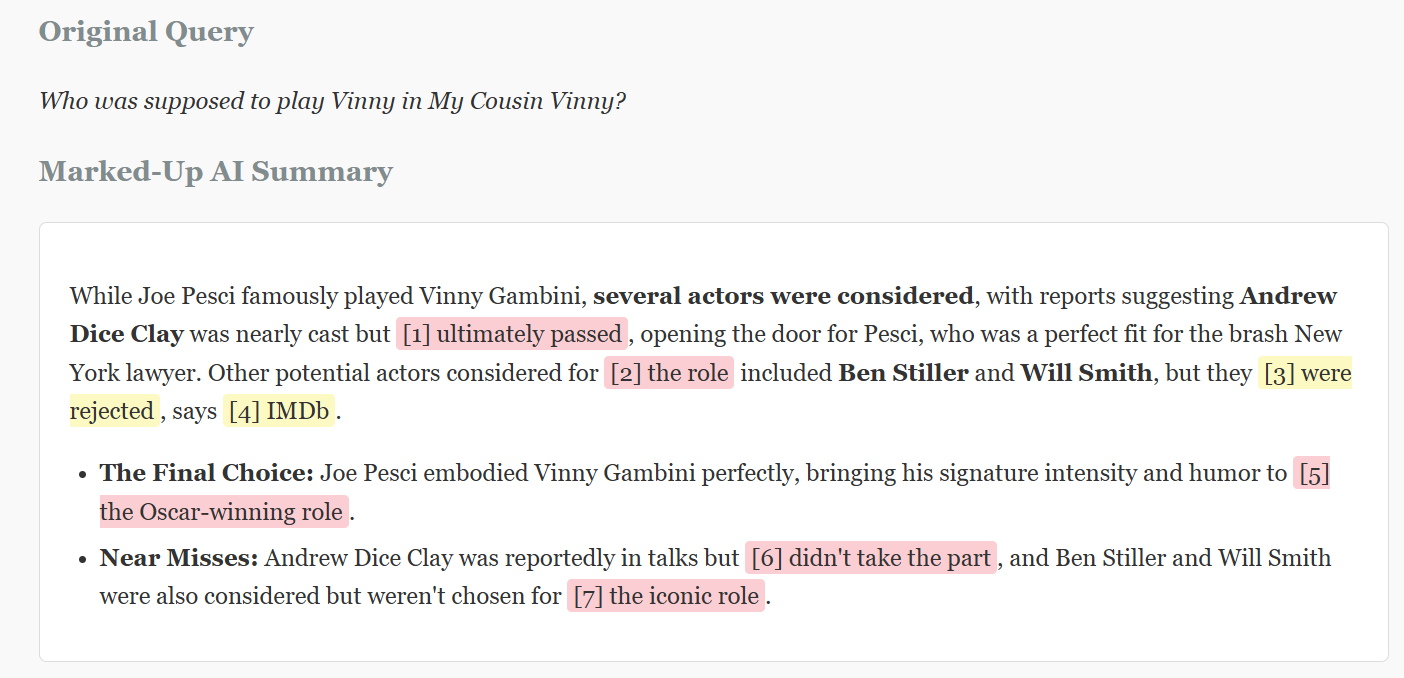

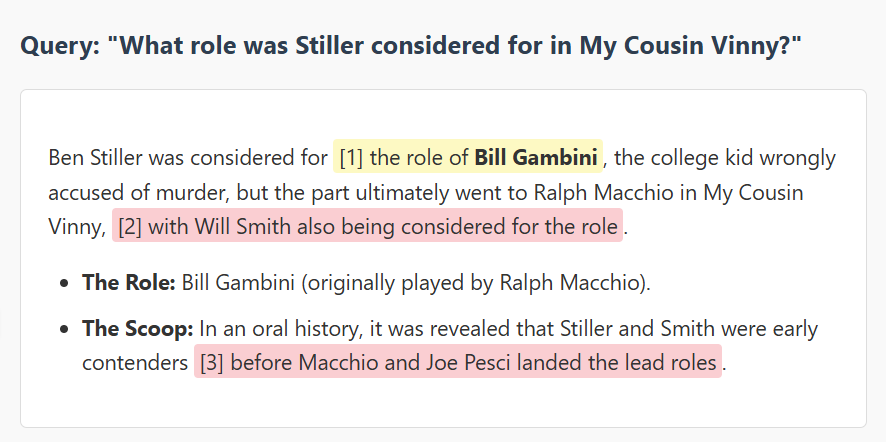

To do this, I’ve been testing it using examples about film and TV. This turns out to be a pretty productive testing space. Here’s the result for the question “Who was supposed to play Vinny in My Cousin Vinny?” We take the output of AI Overview and have the prompt (which I’m calling “Film Stop” right now) do a variation on Deep Background and break it down into atomic claims:

Then it runs some checks specific to roles:

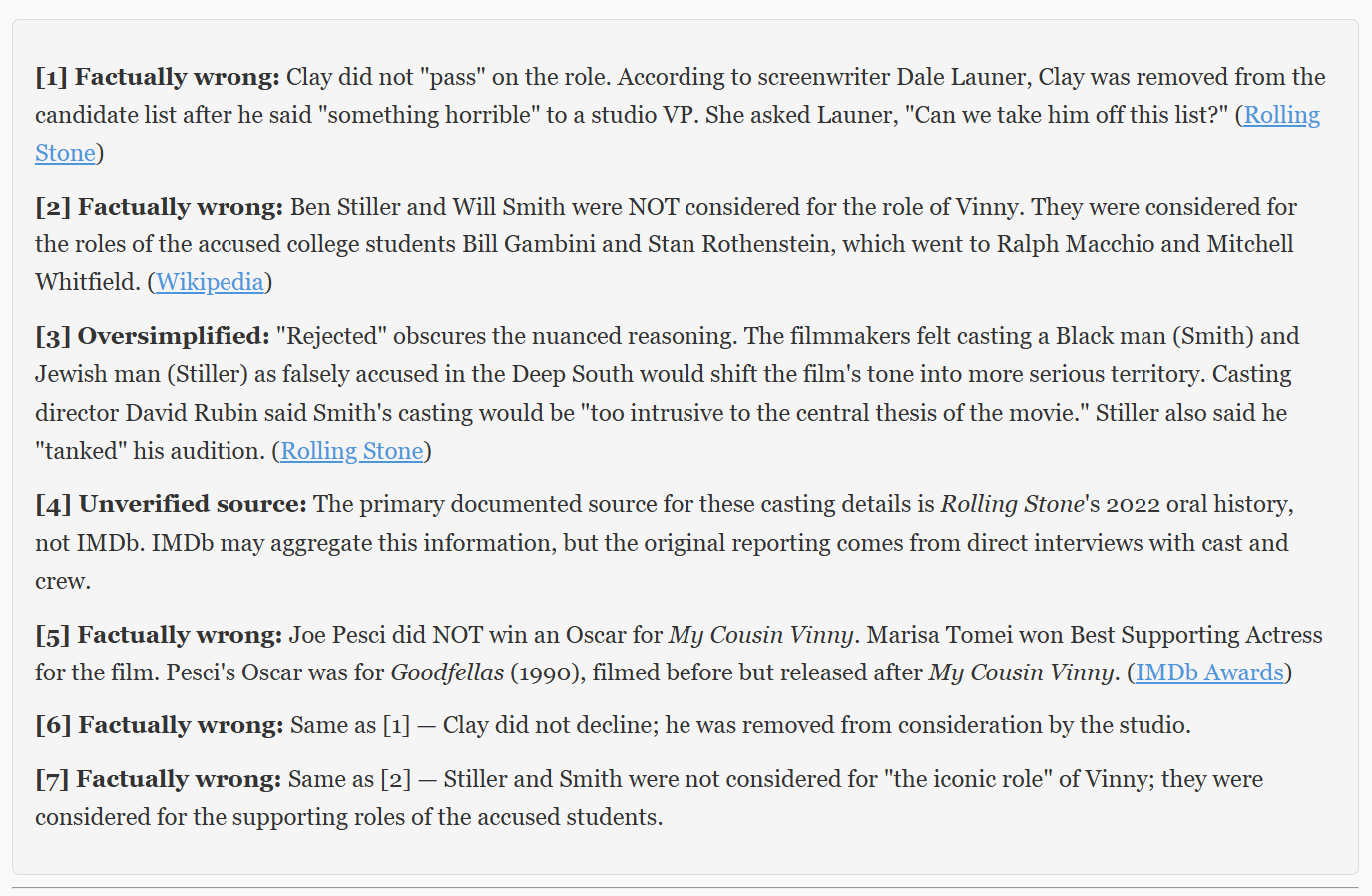

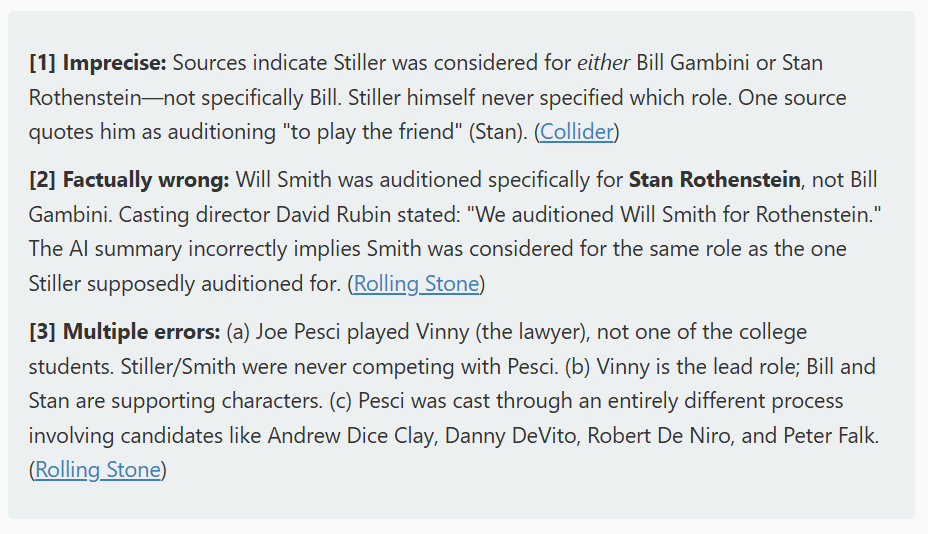

Then it outputs an HTML report, including annotated markup…
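For readers who think better in code, here is a minimal sketch of what "atomic claims rendered as annotated markup" could look like. This is not how Film Stop works internally (Film Stop is a prompt, not a program); the `Claim` fields, the verdict labels, and the `annotate` function are all hypothetical names made up for illustration, and the sample text and URLs are placeholders.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Claim:
    text: str        # exact span of the checked passage this claim covers
    verdict: str     # hypothetical label, e.g. "supported" or "unsupported"
    note: str        # short explanation shown to the reader
    source_url: str  # link the reader can follow to verify the verdict


def annotate(passage: str, claims: list[Claim]) -> str:
    """Wrap each claim's span in a <mark> tag carrying its verdict and note."""
    # Find where each claim sits in the passage; drop claims that do not match.
    located = [(passage.find(c.text), c) for c in claims]
    located = sorted((p for p in located if p[0] >= 0), key=lambda p: p[0])

    parts, pos = [], 0
    for start, claim in located:
        if start < pos:
            continue  # skip spans that overlap one already annotated
        parts.append(escape(passage[pos:start]))
        parts.append(
            f'<mark class="{escape(claim.verdict)}" title="{escape(claim.note)}">'
            f"{escape(claim.text)}</mark>"
            f'<a href="{escape(claim.source_url)}">[source]</a>'
        )
        pos = start + len(claim.text)
    parts.append(escape(passage[pos:]))
    return "".join(parts)


if __name__ == "__main__":
    passage = "Actor A won an award. Actor B read for the lead."
    claims = [
        Claim("Actor A won an award", "unsupported", "no record", "https://example.com/a"),
        Claim("Actor B read for the lead", "supported", "confirmed", "https://example.com/b"),
    ]
    print(annotate(passage, claims))
```

The point of keeping a source link on every claim is the same as in the report itself: the annotation is a starting point for the reader to verify, not a final ruling.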

What have I learned so far? First, while it makes some errors, Film Stop is pretty impressive. The prompt text is a mess right now with so much cruft in it from Deep Background, but it’s already working really well.

Secondly (and not a surprise), AI Overview gets things wrong constantly, at least when I’m asking it questions on film and TV. To me that feels like an opportunity for exploration. The above level of error is not abnormal; it’s a bit above the mean, but well within the normal range of error for AIO. It fabricates an Oscar for Pesci, whiffs on why Clay was not cast, and gets the roles Stiller and Smith were considered for terribly wrong. The question I’m asking here is not obscure or specially constructed to cause error. In this case it was a suggested follow-up query Google fed me. You can produce this level of error all day long.

Finally, there’s an important behavior here around what I’d call “foreground” and “background” content in AIO (roughly, but not exactly, a match for “at-issue” and “not-at-issue” content in linguistics). For instance, if we drill down on that Ben Stiller error and ask directly which role Stiller auditioned for, AIO gets that right (the foreground content) but then gets the background content wrong:

There’s a little mushiness in the checker right now, and I’m obviously not saying you can blindly trust it. That’s what the links are for, after all.

But on a more interesting note, you can really do this all day long, leapfrogging foreground and background content, and mapping out error paths:

Get a foreground response that’s good

Check the background content and find out a good 10-25% of it is wrong

Ask about the background content it got wrong and (usually) get a good or at least better answer now that it is foreground content

Check the background content on that answer and find out 10-25% of it is wrong

Make up a question about that background content and watch it get the foreground content right and the background content wrong.

And so on. And that’s what I’ve been doing lately. I’m not sure where I’m going with that, but I’m finding some really interesting stuff on the structure of error, so more on that later.
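The leapfrogging loop described above can be sketched in a few lines. This is only an illustration under assumptions: `ask`, `check_background`, and `make_question` are hypothetical stand-ins for querying AI Overview, running the checker over the answer's background content, and writing a follow-up question by hand. None of them corresponds to a real API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hop:
    question: str
    answer: str
    wrong_background: list[str]  # background claims the checker flagged as wrong


def leapfrog(
    question: str,
    ask: Callable[[str], str],                     # hypothetical: get an answer
    check_background: Callable[[str], list[str]],  # hypothetical: flag bad background claims
    make_question: Callable[[str], str],           # hypothetical: turn a claim into a question
    max_hops: int = 5,
) -> list[Hop]:
    """Follow wrong background claims from answer to answer, recording the path."""
    path = []
    for _ in range(max_hops):
        answer = ask(question)
        wrong = check_background(answer)
        path.append(Hop(question, answer, wrong))
        if not wrong:
            break  # nothing left to drill into on this path
        # Promote the first wrong background claim to the foreground of the next query.
        question = make_question(wrong[0])
    return path
```

Each `Hop` keeps the question, the answer, and the flagged background claims together, so the resulting list is the error path itself and can be inspected or compared across starting questions.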

TY! All of this, your effort, your detailed explanation. Great to have and read and consider, as an educator and someone not at all making an effort to create this service. Would I use it? Yes. Can I create it? No. Are you seeking a good from this technology? I can see a good use for it and my students, yes. TY!

Have you tried recursive prompting?